Big Data: Parallelism and Hadoop: Basics

Let me start this blog by putting two scenarios in front of you:

Scenario I: You are given a bucket full of mixed fruits. There are three kinds of fruit, say apples, mangoes and bananas. How would you count the total number of apples, mangoes and bananas in the bucket?

The simplest answer would be to count the fruits one by one and arrive at the required result in the end.

Scenario II: Now suppose that instead of a bucket of fruits, you are given a truck full of mixed fruits. How would you count the total number of each kind of fruit this time?

The most feasible approach would be to divide the work (instead of counting the entire truckload one by one). We would fill baskets with the mixed fruits and give one basket each to different people [WORKER/SLAVE]. Each person counts their own basket (without needing to communicate with the others), and in the end we [MASTER] sum the results from each basket to get the final result. Using this approach we would save time and effort [I hope you would agree].

Well, if you are still wondering why I started off with these scenarios, then I have to say that HADOOP is built on this simple basic principle. In technical terminology, the second scenario describes what is called “parallel processing” or “distributed system programming”. A parallel processing system has the concept of a Master and Workers. The Master divides the work and each Worker does its allotted share. The work done by each Worker is sent back to the Master.
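To make the fruit truck idea concrete, here is a minimal sketch in Python. It is only an illustration, not how Hadoop itself is written: the function names `count_basket` and `count_truck` are made up for this example, and a thread pool on one machine stands in for the separate workers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_basket(basket):
    """Worker: count the fruits in one basket, independently of the others."""
    return Counter(basket)


def count_truck(truck, basket_size, workers=4):
    """Master: split the truck into baskets, hand them out, sum the results."""
    baskets = [truck[i:i + basket_size] for i in range(0, len(truck), basket_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = list(pool.map(count_basket, baskets))
    total = Counter()
    for partial in partial_counts:
        total += partial  # the master only adds up the per-basket results
    return total


# A small "truck": 7 fruits repeated 1000 times (hypothetical sample data).
truck = ["apple", "mango", "banana", "apple", "banana", "apple", "mango"] * 1000
totals = count_truck(truck, basket_size=500)
print(totals)
```

Notice that the workers never talk to each other; only the master sees all the partial counts.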

The situation with BIG DATA is similar. There is plenty of data available (just like the truck of fruits), more than any single machine can handle alone, and most importantly there is the 3-V [volume, variety and velocity] factor of BIG DATA. To handle such a situation, the Apache community came up with HADOOP, a high-performance distributed data storage and processing system that can store any kind of data from any source at a very large scale and run very sophisticated analysis on it.

Hadoop architecture is mainly based on the following two components:

1. HDFS [Hadoop Distributed File System]:

It is the storage layer of Hadoop. Whenever data arrives at the cluster*, the HDFS software breaks it into pieces and distributes them to the participating servers in the cluster.
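The "break into pieces and distribute" idea can be sketched in a few lines of Python. This is a toy model under my own assumptions, not the HDFS API: the helper names, the tiny block size and the round-robin placement are all invented for illustration, and it ignores details of the real system such as keeping multiple copies of each piece.

```python
def split_into_blocks(data, block_size):
    """Cut the incoming data into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def distribute(blocks, servers):
    """Assign blocks to servers in round-robin order (an assumed, simplistic policy)."""
    placement = {server: [] for server in servers}
    for index, block in enumerate(blocks):
        server = servers[index % len(servers)]
        placement[server].append((index, block))
    return placement


data = b"abcdefghijklmnopqrstuvwxyz"  # hypothetical incoming file
blocks = split_into_blocks(data, block_size=8)
placement = distribute(blocks, ["server-1", "server-2", "server-3"])

for server, held in placement.items():
    print(server, held)
```

Because each piece carries its index, the original data can be put back together by joining the blocks in order.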

2. MapReduce:

As the data is stored as fragments across various servers, MapReduce uses its programming logic to run the required job on the data held by each server and later returns the results to the Master server. The computation happens locally and in parallel across all servers in the cluster [Master – Worker concept].
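Here is a rough sketch of the map and reduce steps in plain Python, reusing the fruit counting example. It is not Hadoop's Java MapReduce API; the function names `map_phase`, `shuffle` and `reduce_phase` are my own, and everything runs in one process, so it only shows the flow of the data.

```python
from collections import defaultdict


def map_phase(fragment):
    """Map: runs on one fragment and emits a (fruit, 1) pair per fruit seen."""
    return [(word, 1) for word in fragment.split()]


def shuffle(mapped_pairs):
    """Group all emitted values by key so each key can be reduced on its own."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


# Three fragments, as if stored on three different servers (sample data).
fragments = ["apple mango apple", "banana apple", "mango banana banana"]

mapped = [pair for fragment in fragments for pair in map_phase(fragment)]
result = dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())
print(result)
```

The map step only ever looks at one fragment, which is why it can run locally on the server that holds that fragment.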

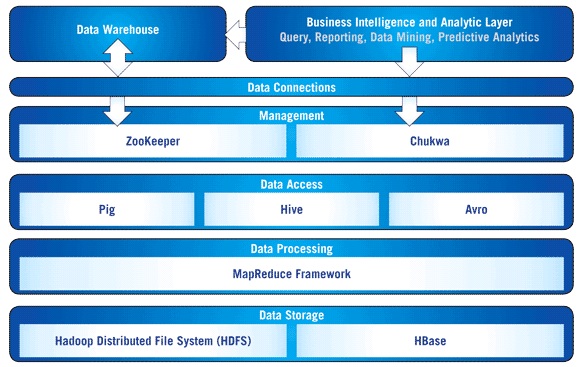

The picture above shows the Hadoop ecosystem, which I will explain in detail in my later blogs. I hope the parallel, distributed concept is clear by now. It will be useful in understanding the architecture of Hadoop.

[A bit of history on Hadoop: Hadoop was created by Doug Cutting, who named it after his son’s toy elephant. Hadoop was derived from Google’s MapReduce and Google File System (GFS) papers. Hadoop is a top-level Apache project written in the Java programming language, built and used by a global community of contributors. Yahoo! has been the largest contributor to the project, and uses Hadoop extensively across its businesses.]

FAQ:

*cluster – A computer cluster consists of a set of loosely connected computers that work together so that in many respects they can be viewed as a single system. The components of a cluster are usually connected to each other through fast local area networks, with each node (a computer used as a server) running its own instance of an operating system. Computer clusters emerged from the convergence of a number of computing trends, including the availability of low-cost microprocessors, high-speed networks, and software for high-performance distributed computing.

[Source: Wikipedia [Hadoop History] and Google]

Big Data: An Introduction

Hey guys, I am back to blogging after a pretty long gap. Since my last blog I have been going through data warehousing stuff. In the midst of learning data warehousing techniques, I came to know about a bigger issue that is troubling IT companies. It’s called BIG DATA. So I thought I would share what I know about this advanced area of business analytics with you guys.

If you are thinking BIG DATA deals with “data which is big in nature”, then I have to say you are perfectly correct. But if your idea of big stops at database tables with 1,000 to 100K rows, then I fear BIG DATA is something bigger and messier than that. Well, a formal definition of BIG DATA would go as:

“Big data is a term applied to data sets both structured and unstructured, whose volume is more than the capacity of commonly used software tools to capture, manage, and process the data with usual database and software techniques within an acceptable time.”

Today, companies face a serious issue. They have access to lots and lots of data and they have no idea what to do with it. An IBM survey shows that over half of business leaders today realize that they don’t have access to the insights they need to do their jobs. This data is normally generated from log files, IM chats, Facebook chats, emails, sensors, etc. It is raw in nature and is something you won’t find in database table (row-column) format. It accumulates from the day-to-day work of each and every associate. Companies are trying to tap these data stores to derive business intelligence and strategies. BIG DATA is not about relational databases but about data whose pieces have no predefined relations to each other.

BIG DATA can basically be described by three characteristics of the data:

1. VOLUME:

There is a huge amount of data being stored in the world. In the year 2000, there were around 800,000 petabytes (1 PB = 10^15 bytes) of data stored in the world. The volume of data is growing rapidly, and companies have no idea what to do with it or how to process it. Twitter alone generates more than 7 terabytes of data every day and Facebook generates around 10 TB. These values are growing exponentially. Some enterprises generate terabytes of data every hour of every day of the year. It won’t be wrong to think that we are drowning deep in an ocean of data. By 2020, the total is expected to reach 35 zettabytes (1 ZB = 10^21 bytes).
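To get a feel for these units, here is a quick back-of-the-envelope calculation in Python. It only uses the two worldwide figures quoted above (800,000 PB in 2000 and 35 ZB expected by 2020); the variable names are mine.

```python
PETABYTE = 10**15   # bytes
ZETTABYTE = 10**21  # bytes

stored_2000 = 800_000 * PETABYTE   # worldwide data in the year 2000
projected_2020 = 35 * ZETTABYTE    # expected worldwide data by 2020

# Express the year-2000 figure in zettabytes so both numbers share a unit.
stored_2000_in_zb = stored_2000 / ZETTABYTE

# How many times larger the 2020 projection is than the 2000 figure.
growth_factor = projected_2020 / stored_2000

print(f"2000: {stored_2000_in_zb} ZB")
print(f"2020 projection is {growth_factor} times the 2000 volume")
```

So 800,000 PB is a little under one zettabyte, and the 2020 projection is more than forty times that.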

2. VARIETY:

With a huge volume of data comes another problem, i.e. variety. With the rapid spread of technology, data is no longer limited to relational databases; it has grown to include raw unstructured and semi-structured data, mainly coming from web pages, log files, emails, chats, etc. Traditional systems struggle to store this data and perform the analytics required to gain intelligence, because most of the information generated doesn’t lend itself to traditional database technologies.

3. VELOCITY:

Velocity is the characteristic of BIG DATA that deals with how fast data arrives, is stored and is used for analytics. In BIG DATA terminology, we are looking at the volume and variety aspects as well. So the rate of arrival of data, combined with its volume and variety, is something a traditional database technology can hardly handle. According to surveys, around 2.9 million emails are sent every second, 20 hours of video are uploaded to YouTube every minute and around 50 million tweets are posted on Twitter per day. So I think you can imagine the velocity of the data coming at you.

There is also another characteristic of BIG DATA, which is VALUE. The value aspect of big data is what all companies are after. Unless you are able to derive some business intelligence and value from the data you have, there is no use in having it. In simple terms, value deals with whether the raw unstructured data at hand can yield meaningful statistics that are useful in taking proper business decisions.

Companies are trying to extract all the information possible, derive better intelligence out of it and gain a better understanding of their customers, the marketplace and the business. A few technical solutions like HADOOP (which I will explain in my next blog), NoSQL, DKVS databases, etc. are combating BIG DATA problems.

For now, all I can conclude is that the right use of BIG DATA will allow analysts to spot trends and gain niche insights that help create value and innovation much faster than conventional methods. It would also help in better meeting consumer demand and facilitating growth.
